Anyone even slightly connected to web development (marketing experts, web designers, web developers, etc.) has surely heard something lately about the advantages of A/B testing and the impact it can have on conversion rates.

If you look over the countless articles available about this subject on the web, you will probably get the feeling that you have found the Holy Grail. Especially if you're a designer, you might hope to find some impartial data to replace the long discussions about ‘the color and size of the button,’ as well as the tools to objectively test your ideas on actual human users.

The reality, however, is that if you dig a little deeper (or simply start testing some of these claims yourself), you’ll find that it’s not all rainbows and unicorns. If you’ve never tested before, you’ll find several 5-20% increases to your bottom line.

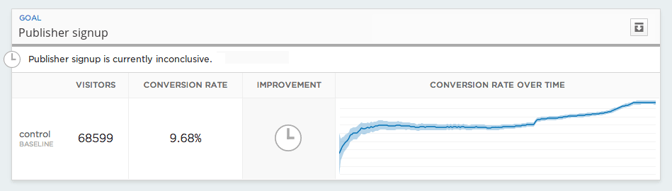

So yes, A/B testing can be a very powerful tool that will provide you with important data, but you should also keep in mind that not every test will end as conclusively as those articles suggest. In fact, most of them won’t. Here's a visualization of an A/B test we ran using Optimizely. We were testing whether changing the color of the registration button to a more visible one would increase the number of registrations and boost conversion. We were quite convinced the color change would do the trick, but it turned out to be irrelevant.

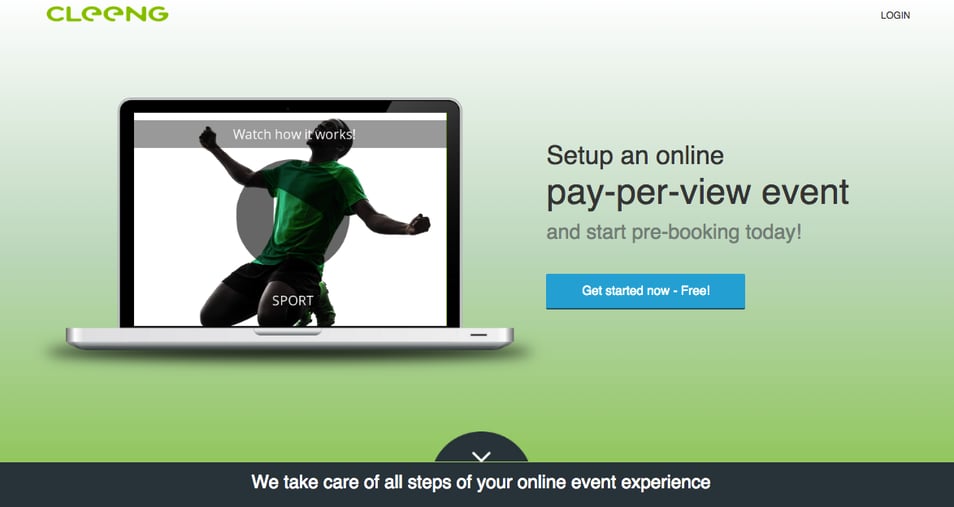

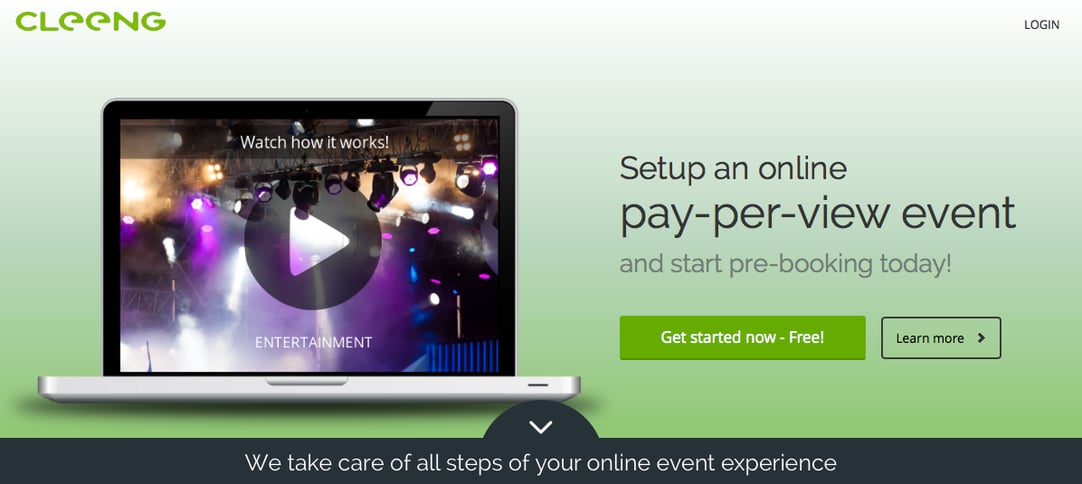

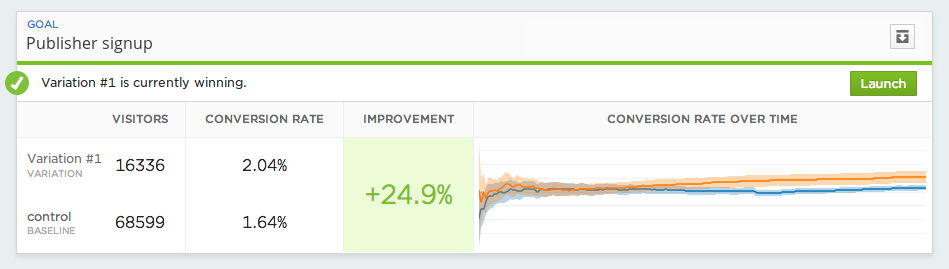
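To make "irrelevant" a bit more concrete, here is a minimal sketch of the kind of check a testing tool runs behind the scenes: a two-proportion z-test on conversion counts. The function name and the numbers are hypothetical, chosen only for illustration; they are not the results of our test, and Optimizely's own statistics may work differently.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: 5.0% vs 5.5% conversion with 2,400 visitors each.
z, p = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=132, n_b=2400)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts, variation B looks better on paper, yet the p-value stays well above the usual 0.05 threshold, so the difference could easily be noise.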

A/B testing was very helpful when we were improving the performance of the landing page for our Cleeng Live! solution. We noticed that after we added a 'Learn more' button at the top of the page, the engagement rate with the page went up, as did the conversion. Compare versions A and B (we stuck with the latter, as confirmed by the testing outcome).

Version A - without the 'Learn more' button; to scroll you had to click on the little arrow below the banner picture:

Version B - with a 'Learn more' button:

And here are some stats proving the B version worked:

In my experience, to make the most of what A/B testing has to offer, there are a few important guidelines that you should remember before you begin your testing:

4 tips to improve A/B testing:

- Thoroughly think your tests through. Tests can be time-consuming. This is especially true if you're testing a new page, in which case you won’t have many unique visitors, so it may take days or even weeks to get sufficient data. So before you start, take a step back (or maybe even a few steps) and decide whether you expect that particular element to have a real impact. Bear in mind as well that, unless you're in the big leagues (in terms of users), a 1% increase won’t do much for you.

- Don’t jump the gun. Be patient and, just like a good scientist, don’t reach any conclusions before the test ends. Some tools will start to show you very early on that one variation is better than the other(s), but when the full data set arrives, the outcome may change suddenly and completely. Some experienced users even suggest that you should refrain entirely from checking the results before the test ends so you don’t get tempted to declare a winner too soon.

- Take one thing at a time. Decide on one goal. Resist the temptation to test everything all at once because it won’t lead anywhere. It’s best to focus on one thing you want to improve, and iterate on that.

- Don’t waste your time. Do your research. There’s an excellent chance that what you want to test has already been tested in every way imaginable. Of course, each site is unique in its own way, but general tendencies have likely already been found. So spend half an hour googling to determine whether it’s really worth testing, or whether someone has already established that the impact of a particular element (whether it’s a message, info about a discount or the color of a button) is really so significant. If you can’t find any conclusive answers, the search may at least point you in a particular direction to start your testing.
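To get a feel for how long "days or even weeks" can turn out to be, here is a rough sketch of a sample-size estimate using the standard normal-approximation formula for comparing two conversion rates. The function names, the 5% baseline conversion rate, the 500 visitors per day, and the defaults (5% significance level, 80% power) are all assumptions for illustration; plug in your own numbers.

```python
import math
from statistics import NormalDist

def visitors_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect a relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # Critical values for a two-sided test and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

def days_to_finish(n_per_variant, daily_visitors, variants=2):
    """Days needed if daily traffic is split evenly across all variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)

# Hypothetical page: 5% baseline conversion, 500 visitors per day.
for lift in (0.01, 0.05, 0.20):
    n = visitors_per_variant(baseline_rate=0.05, relative_lift=lift)
    days = days_to_finish(n, daily_visitors=500)
    print(f"{lift:.0%} lift: {n} visitors per variant, about {days} days")
```

Under these assumptions, a 20% lift needs several thousand visitors per variant, while a 1% lift needs millions. That is one more reason to think a test through before launching it, and to let it run to its planned end instead of stopping at the first promising result.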

Don't forget that this is just the tip of the iceberg. To follow the advice of Jakob Nielsen, "you also need to complement A/B split tests with user research to identify true causes and develop well informed design variations".

That's my experience, but I'd be interested to hear your perspective too. Please share your tips as well, so we can make A/B testing better for all of us.